Think biometrics protect you from hacking? Think again

13-07-2022 | By Paul Whytock

When it comes to providing personal security, it’s fair to say that biometrics are pretty good but not infallible. Biometrics are vulnerable to numerous hacking methods, including the dramatically named threat acting, but more on that later.

The problem is that, like any security information, biometric data has to be stored somewhere, and it has to be available if it is to serve as an authentication method in place of a password. This makes it hackable, and cyber thieves are getting better at beating biometrics.

There is another fundamental flaw with biometric data: it is immutable. Unlike a traditional password, it cannot be changed if a security alert reveals that your biometric data has been stolen.

Take iris scanning as an example. This identity authentication method uses visible and near-infrared light to create a high-definition image of a person’s eye. Your iris has over 250 unique characteristics compared to your fingerprint, which has about 45.

Not surprisingly, then, more and more identity authentication processes are using an iris scan.

But there are security weaknesses even with something this sophisticated, and some are asking how secure biometric authentication really is. For example, if a database of biometric information is compromised, the fix is not simply a matter of deleting the existing data and issuing new security codes.

Several factors come into play that provide potential hacking avenues for cybercriminals. Firstly, biometric data is often stored by third-party vendors, that is, individuals or companies contracted to provide services to consumers on behalf of another organisation. This means that biometric data can be held by many different organisations, and in some instances their data security may have a weakness that is an invitation to hackers.

Secondly, biometric authentication systems have not been around as long as password-based systems. Consequently, they suffer from more system bugs, which lead to false positives and wrongful denials. The security risk here is that the wrong person can gain access to data through a false positive identification.

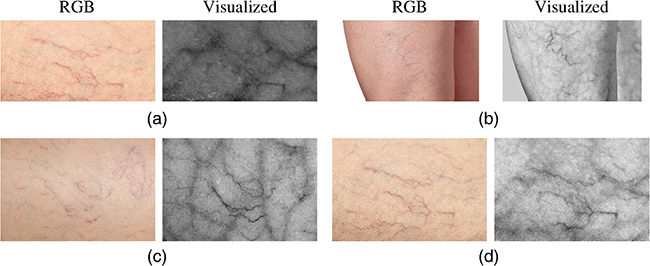

But biometrics is not all about iris scanning. Biometrics involves various identification methods that depend on a human’s anatomical and structural makeup. These include fingerprints, vein and artery patterns, iris spots, voice patterns and even how someone moves when they walk or work at their computer.

Algorithm Errors

Recognising and analysing these forms of biometric information requires pattern recognition algorithms, but the problem is that these can be susceptible to two forms of operational error, the false accept and the false reject.

In the first instance, an unauthorised person is mistakenly allowed access. In the second, an authorised person is erroneously rejected from the system. Typically, fine-tuning a biometric system’s parameters can decrease the false acceptance rate at the cost of increasing the false rejection rate, making the system more restrictive.

A high false acceptance rate jeopardises the system’s security, while a high false rejection rate reduces user satisfaction. Because both rates vary with the system’s settings, the overall accuracy of a biometric system is measured by the crossover error rate: the error rate at the parameter setting where the false acceptance and false rejection rates are equal.
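The trade-off described above can be illustrated with a minimal Python sketch. It assumes a hypothetical matcher that outputs a similarity score between 0 and 1 and accepts any score at or above a threshold; the function names and the score lists are invented for illustration and do not come from any real system.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FAR, FRR) when scores at or above the threshold are accepted."""
    # False acceptance: an impostor's score clears the threshold.
    far = sum(s >= threshold for s in impostor) / len(impostor)
    # False rejection: a genuine user's score falls below the threshold.
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def crossover_error_rate(genuine, impostor):
    """Scan observed scores as thresholds; return the point where FAR and FRR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = error_rates(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, far, frr)
    _, threshold, far, frr = best
    return threshold, (far + frr) / 2


# Made-up similarity scores, for illustration only.
genuine_scores = [0.91, 0.85, 0.78, 0.88, 0.66, 0.95, 0.72, 0.81]
impostor_scores = [0.30, 0.45, 0.52, 0.70, 0.25, 0.61, 0.38, 0.48]

# Raising the threshold lowers FAR and raises FRR.
for t in (0.5, 0.7, 0.9):
    far, frr = error_rates(genuine_scores, impostor_scores, t)
    print(f"threshold={t:.2f}  FAR={far:.3f}  FRR={frr:.3f}")

threshold, cer = crossover_error_rate(genuine_scores, impostor_scores)
print(f"crossover at threshold={threshold:.2f}, error rate={cer:.3f}")
```

With these made-up scores, the crossover lands at a threshold of 0.70, where both error rates are 12.5%. Real systems estimate these rates from far larger samples, and the two curves rarely meet exactly, which is why the sketch picks the closest point.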

Citizens’ Rights

There are other concerns surrounding biometric security systems and the effects they have on citizens. These are not directly connected to hacking but involve civil liberties and personal privacy. It may be possible to scan irises from a distance, meaning that data could be collected dishonestly.

A research team from New York University recently created an artificial intelligence platform that successfully recreated full fingerprints from partial prints. These created fingerprints could successfully pass through a biometric authentication system on one-in-five occasions.

Another social factor is that biometric authentication systems can often be biased against users who cannot easily submit biometrics. This includes people whose biometric details have changed because of injury. For example, a badly cut finger may lead to scarring that makes a fingerprint unrecognisable, and an eye injury resulting in corneal erosion syndrome could cause problems with iris scanning.

Long-Term Identity Loss: Can Biometrics be Hacked?

In addition to the errors that are inherent to pattern recognition systems, Identity and Access Management (IAM) systems based on biometric factors can be hacked and subverted like any other IAM system.

A hacked biometric factor can never be replaced, and that individual will forever have to live with a biometric feature that does not conclusively prove his or her authenticity.

In addition, biometric information is understandably classified as personal data and is subject to the European Union’s General Data Protection Regulation (GDPR).

GDPR protection applies regardless of the technology used for processing biometric data, and it doesn’t matter whether the data is held in a data bank, captured through video surveillance or stored on paper.

Because of this, the hacking of a biometric data bank is not only a threat to the security of any system that uses that data for authentication. It can also lead to expensive legal action against the company involved, which is legally responsible for safeguarding personal data under GDPR.

How Do They Do It?

Biometric security systems have been successfully breached in many ways, ranging from conventional cybercrime methods to sophisticated techniques involving artificial fingerprints, face masks and faked voice recognition data.

Most cybercriminals are classified as threat actors. They frequently perpetrate ransom-based attacks in which kidnapped biometric data is not released until money is paid. The sad fact is that these attacks are pretty profitable, as the ransoms are usually paid and the police are not informed. Their attack methods include phishing, ransomware and other malware.

Next comes insider crime, which typically occurs when an employee or third-party contractor wants to infiltrate organisational data or disrupt key processes. Their actions are usually aimed at maliciously damaging an organisation’s cybersecurity infrastructure.

There are also some very novel examples of biometric data hacking. For instance, the renowned German data security specialist Jan Krissler used a finger smudge from a smartphone surface to construct an artificial finger with fingerprints using conventional wood glue and graphene. That was used to break the iPhone 5s Touch ID authentication function a few years back.

Amazingly, he is also known for the truly audacious stealing of European Commission President Ursula von der Leyen’s fingerprint. He used high-definition photographs taken during an official event, when she was German defence minister, and replicated the fingerprint with durable latex.

Early biometric facial recognition systems could be tricked by showing a portrait photo of an authorised person. But facial security systems were improved, and authentication was only granted if minimal movements proved that the face in front of the camera was alive.

However, it has been demonstrated that proof of life relative to facial authentication can be simulated by moving a pen over the portrait photo of an authorised person.

This kind of attack requires knowledge about the identities of authorised persons and a facial portrait photo, but researchers at Tel Aviv University recently developed a new type of attack on facial recognition systems that works without the need for an individual’s photograph. They constructed a “master face” with a relatively high success rate of unlocking any facial recognition system.

This can happen because the machine learning algorithms behind facial recognition do not classify faces using all available information. Instead, they use a particular subset of features. Facial images can be optimised so that these features are as similar as possible to the average of all faces in a population. These master face configurations are said to have a 24% success rate.

But it’s not only facial biometrics that are vulnerable. Researchers have shown that voice recognition can be hacked if the threat actor has a sufficiently large and varied set of voice samples. The samples are then analysed and modified by computer to replicate speech. Chinese researchers have also demonstrated the ability to send ultrasonic commands, inaudible to humans, to voice recognition tools like Amazon Alexa.

A relatively new form of biometric security is behavioural biometrics, which authenticates users based on behavioural patterns. One method identifies the unique, individual ways in which people type and move when using their computer. It does not rely on parts of their bodies such as fingerprints or irises, on objects such as security passes, or on knowledge-based security methods such as passwords.

Unlike traditional authentication methods, which authenticate only when access is initiated, behavioural biometrics technologies constantly monitor a person’s body movements.
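To give a feel for how typing behaviour might be turned into numbers, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of any commercial product: the event format, the two features (dwell time, meaning how long a key is held, and flight time, meaning the gap between keys), the enrolled values and the alert threshold of 3.0 are all hypothetical.

```python
from statistics import mean, stdev


def timing_features(events):
    """events: list of (key, press_ms, release_ms) tuples in typing order.

    Returns (mean dwell time, mean flight time) in milliseconds.
    """
    dwell = [release - press for _, press, release in events]
    flight = [events[i + 1][1] - events[i][2] for i in range(len(events) - 1)]
    return mean(dwell), mean(flight)


def anomaly_score(profile, sample):
    """Largest z-score of the sample's features against the enrolled sessions."""
    score = 0.0
    for i, value in enumerate(sample):
        history = [session[i] for session in profile]
        score = max(score, abs(value - mean(history)) / stdev(history))
    return score


# Hypothetical enrolled sessions: (mean dwell, mean flight) per session.
enrolled = [(95, 120), (100, 130), (105, 125), (98, 118)]

# Hypothetical key events captured during the current session.
session = [("p", 0, 100), ("a", 220, 320), ("s", 450, 550)]

ALERT_THRESHOLD = 3.0  # assumed cut-off, chosen only for this example

score = anomaly_score(enrolled, timing_features(session))
print("suspicious" if score > ALERT_THRESHOLD else "consistent with profile")
```

Because the check can be rerun on every new batch of key events, a scheme like this fits the continuous monitoring described above. It also hints at the weakness: whoever can reset or retrain the stored profile controls what counts as normal.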

According to cybercrime intelligence specialist Intel 471, cybercriminals are increasingly looking for ways to bypass such identification.

In one example, an unnamed cybercriminal noticed that some banks had implemented random forest algorithms to cut the cost of a popular digital identity subscription service. Random forest is a flexible, easy-to-use machine learning algorithm.

This cheaper, less effective strategy made it easier for the criminal to reset behavioural pattern parameters and penetrate protected data areas.

As a result, this particular threat actor was able to bypass the two-factor authentication (2FA) system. 2FA is commonly implemented with a simple, free authenticator app that generates time-based one-time passwords (TOTP).
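For readers curious how a time-based one-time password is produced, the following Python sketch follows the published TOTP standard (RFC 6238, built on RFC 4226). It assumes HMAC-SHA-1, a 30-second time step and 6 digits, which are common defaults; the source does not say which app or settings the banks used, and the secret shown is the RFC’s published test value, not a real key.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA-1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte window.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


# Test secret published in RFC 6238; never use it for a real account.
rfc_secret = b"12345678901234567890"

print(totp(rfc_secret, at=59, digits=8))  # RFC 6238 test vector: 94287082
print(totp(rfc_secret))                   # code for the current 30-second window
```

The code depends only on a shared secret and the clock, so it proves possession of the enrolled device but says nothing about who is holding it. That is why it is normally paired with a second factor such as a password or a biometric check.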

So, in summary, despite the sophistication of biometric data security, these systems are hackable: by ingenious methods, through cost-cutting on related technologies such as algorithms, or through vulnerabilities created by corrupt third parties or employees.