Smart Glasses for the Visually Impaired with Sensor Fusion

Technical Analysis | 08-05-2025 | By Liam Critchley

Key Takeaways:

- Over 1 billion people globally live with visual impairment, with urban environments posing significant navigation risks due to fast-moving or sudden obstacles.

- A newly developed wearable device combines glasses and a smartphone to deliver real-time obstacle detection using cross-modal sensor fusion and AI.

- The system demonstrated 100% collision avoidance in field trials, with an end-to-end response time under 320 ms and over 11 hours of operational duration.

- Multi-sensory alerts, which combine auditory and tactile feedback, enhance user awareness in noisy environments and support intuitive navigation.

The World Health Organisation (WHO) has stated that over 1 billion people around the world are blind or visually impaired to a degree that affects their mobility and safety in everyday life. For many visually impaired people, independent travel remains a challenge because streets and roads contain many fast-moving obstacles, as well as obstacles that appear suddenly, and built-up, busier areas have more of them than rural places.

For example, a study identified that the city of Changsha in China has a density of 205 obstructions within a 1.8 km district, while another study in the same city stated that around 40% of visually impaired individuals ended up with serious injuries at least once a year. Correlating data on localised studies such as these shows that there are safety concerns for visually impaired people in busy and built-up environments.

It's become apparent through these kinds of studies that developing obstacle avoidance devices is going to be important for ensuring the safety of visually impaired individuals so that they can safely navigate urban environments independently. Current obstacle avoidance methods of guide dogs and white canes are commonplace, but white canes cannot respond to suddenly appearing objects, and people have to go through strict (and lengthy) selection criteria to obtain a guide dog. For example, in China, there are 17 million visually impaired people, but only around 200 guide dogs are available. These ratios obviously vary from country to country, but show the issues that some people can have based on their geographical location.

With advances in technology, especially sensor hardware and artificial intelligence (AI), there could soon be less reliance on traditional obstacle detection methods in favour of more high-tech devices. There is now the potential to develop advanced obstacle detection systems that use a range of sensor technologies to perceive the local environment (a bit like an autonomous vehicle) and detect the presence of objects in real time.

Ideal Obstacle Detection Wearable Principles

Statistical analyses from the National Federation of the Blind (NFB) show that wearable obstacle avoidance devices (WOADs) should be responsive, reliable, durable, and usable through the combination of software design, hardware design, and user experience.

The devices need to be responsive so that they rapidly detect potential obstacles and provide feedback in time for the wearer to avoid them. Responsiveness is characterised by the end-to-end delay, which is the total time from data collection to alert feedback. Based on human response times, the end-to-end delay for obstacle avoidance should be no longer than 320 ms.

The reliability of a device revolves around both accuracy and robustness, and obstacle avoidance devices need to maintain a 100% collision avoidance rate. This is irrespective of whether the object is moving or stationary and regardless of the detection scenario.

The durability of an obstacle detection device is the length of time that it can operate continuously without needing to be recharged. The average operating life of electronic visual impairment aids is around 5 to 8 hours.

The usability of obstacle avoidance devices stems from the ability to seamlessly integrate into people's lives while enabling the wearer to be more efficient and effective at avoiding obstacles. The weight of these devices should not exceed 500 grams to be comfortable for the wearer.
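
The four criteria above can be read as a simple checklist. The sketch below is illustrative only: the class, field, and function names are hypothetical, usability is reduced to the weight limit alone, and the 8-hour durability floor is an assumption taken from the upper end of the 5 to 8 hour average quoted above rather than a stated requirement. The example values are placeholders, not measurements.

```python
from dataclasses import dataclass

# Thresholds taken from the text, except MIN_RUNTIME_H, which is an assumption
# (the upper end of the quoted 5 to 8 hour average for existing aids).
MAX_DELAY_MS = 320.0
REQUIRED_AVOIDANCE_RATE = 1.0
MIN_RUNTIME_H = 8.0
MAX_WEIGHT_G = 500.0


@dataclass
class DeviceSpec:
    """Hypothetical summary of a wearable obstacle avoidance device."""
    end_to_end_delay_ms: float
    collision_avoidance_rate: float  # fraction between 0 and 1
    runtime_h: float
    weight_g: float


def check_requirements(spec: DeviceSpec) -> dict:
    """Return a pass/fail flag for each of the four design criteria."""
    return {
        "responsive": spec.end_to_end_delay_ms <= MAX_DELAY_MS,
        "reliable": spec.collision_avoidance_rate >= REQUIRED_AVOIDANCE_RATE,
        "durable": spec.runtime_h >= MIN_RUNTIME_H,
        "usable": spec.weight_g <= MAX_WEIGHT_G,
    }


# Placeholder values chosen for illustration.
example = DeviceSpec(end_to_end_delay_ms=300.0, collision_avoidance_rate=1.0,
                     runtime_h=11.0, weight_g=400.0)
print(check_requirements(example))
```

A device only qualifies when every flag is true, which is why a design that is fast but heavy, or light but short-lived, still falls short.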

Challenges with Current Obstacle Avoidance Technologies

It's challenging to develop devices that simultaneously meet all the requirements for responsiveness, reliability, durability, and usability. Devices have struggled to meet all the requirements due to issues with sensor technologies, data processing software, and hardware processing capabilities.

Many obstacle avoidance devices integrate different types of sensors into a multi-modal array. However, the heterogeneity in the data formats across the different sensors makes it difficult to process all the data from the sensors into a single and simple format ready for analysis. This reduces the robustness of obstacle detection devices in dynamically changing scenarios.

On the data processing side, processing massive amounts of data from multiple sensor platforms often reduces the responsiveness below the required standard. Connecting the device to a laptop for processing also makes for poor usability. One solution being proffered is to use the computational power of smartphones to handle some of the detection tasks, but efforts to transmit the raw data to date have resulted in significant transmission delays that compromise the reliability of the obstacle avoidance device.

On the hardware side, microcontroller units (MCUs) are the common choice of processor, but they have limited hardware resources that make it difficult for the device to process AI capabilities in real-time. So, more efficient parallel processing hardware is needed to integrate AI analyses into object avoidance devices.

A New Wearable Obstacle Avoidance Device (WOAD) Based on Glasses and a Smartphone

Researchers have now created a WOAD composed of a pair of glasses and a common smartphone. The glasses weighed only 400 grams, 80 grams of which was the battery, making them lighter than a white cane while being a lot more responsive to the wearer's surroundings and more reliable at detecting obstacles in them.

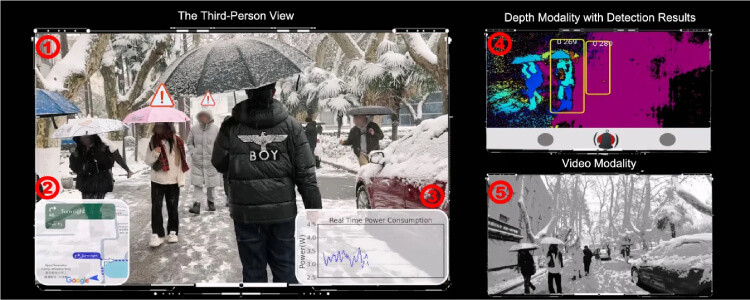

According to field trials involving 12 volunteers with visual impairments, the WOAD glasses demonstrated 100% collision avoidance in real-world urban environments, including complex indoor spaces such as shopping centres and outdoor environments like snowy city streets and poorly lit junctions. This consistent performance across diverse conditions reinforces the device's robustness and dependability in daily use scenarios.

To illustrate the real-world testing scenarios, the following image presents a frame captured during live demonstrations of the WOAD being used in varied indoor and outdoor conditions.

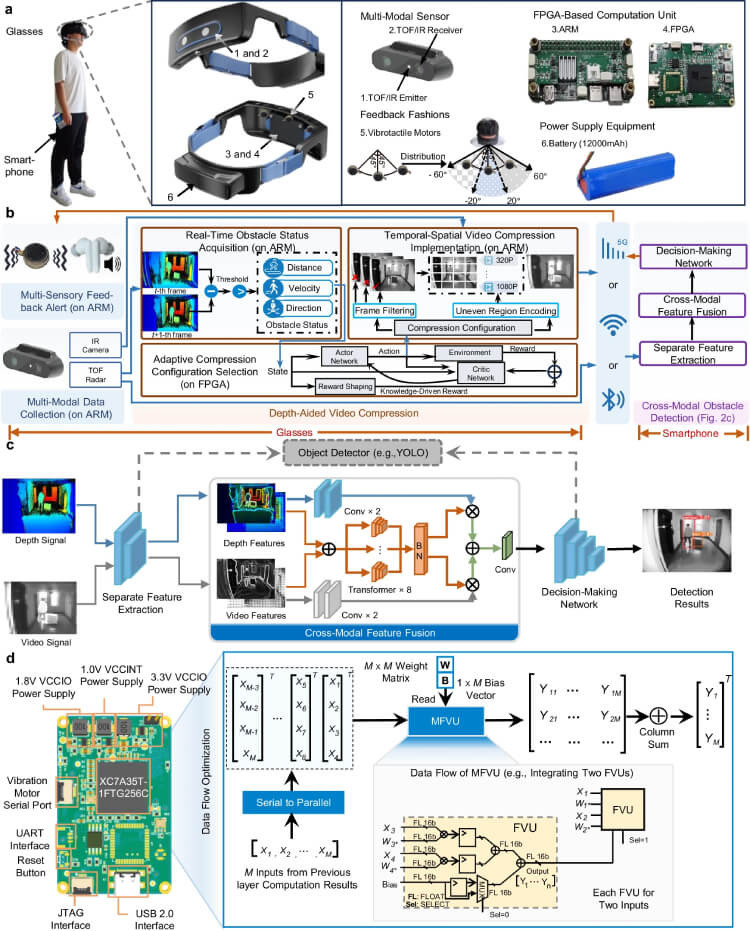

To address challenges with multi-modal sensor data, the smartphone was equipped with a cross-modal obstacle detection module. Other detection devices take the results from the sensors at the outcome level, whereas this module exploits the correlations at the feature level, allowing a wide range of obstacle types to be detected by the different types of sensors.

The cross-modal detection system aligns depth and video data at the feature level, enabling improved accuracy in detecting rapidly appearing or moving objects. Unlike traditional fusion approaches that rely on final output alignment, this method reduces false negatives by integrating multi-modal sensory inputs through a Transformer-based fusion mechanism on the smartphone.
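
Feature-level fusion of this kind is commonly built on cross-attention, where features from one modality query features from another. The sketch below shows that general idea in plain Python. It is not the researchers' model: the function names are hypothetical, the learned projection matrices of a real Transformer are omitted, and the two-dimensional feature vectors are toy values.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross_attention(video_feats, depth_feats):
    """Fuse each video feature with an attention-weighted mix of depth features.

    video_feats: list of query vectors (one per video patch)
    depth_feats: list of key/value vectors (one per depth patch)
    Learned projections are omitted; a real Transformer would apply them.
    """
    dim = len(depth_feats[0])
    scale = math.sqrt(dim)
    fused = []
    for query in video_feats:
        weights = softmax([dot(query, key) / scale for key in depth_feats])
        context = [sum(w * value[i] for w, value in zip(weights, depth_feats))
                   for i in range(dim)]
        # Residual connection: keep the video feature and add depth context.
        fused.append([q + c for q, c in zip(query, context)])
    return fused


# Toy features: two video patches and two depth patches.
video = [[1.0, 0.0], [0.0, 1.0]]
depth = [[2.0, 0.0], [0.0, 2.0]]
fused = cross_attention(video, depth)
print(fused)
```

Because the fusion happens on intermediate features rather than on each sensor's final verdict, a depth cue can strengthen a weak video cue before any detection decision is made.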

Depth-Aided Compression for Real-Time Responsiveness

On the software side, the glasses were able to capture both depth data and video simultaneously and use a depth-aided video compression module to reduce the transmission delay of the data to the smartphone. This approach increased the responsiveness of the system. Rather than prioritising visual perception quality for a human viewer, the module shifted the focus towards task-oriented performance to improve compression efficiency. The software was also able to use the differences between video frames to adaptively compress the video without losing the obstacle features in the video.

Depth-aided compression leverages obstacle status indicators, such as relative speed and direction extracted from sequential depth maps. These data points inform real-time decisions about video frame filtering and resolution adjustment, optimising for both detection accuracy and transmission efficiency without overloading the mobile processor.
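
As a rough illustration of how depth-derived status could drive these decisions, the sketch below estimates closing speed from two consecutive depth readings and picks a frame-filtering and resolution choice. The function names, the three-tier policy, and the `near_m` and `fast_mps` thresholds are all assumptions made for the example and are not taken from the study.

```python
def closing_speed(prev_depth_m, curr_depth_m, dt_s):
    """Relative speed towards the wearer in m/s (positive means approaching)."""
    return (prev_depth_m - curr_depth_m) / dt_s


def compression_plan(prev_depth_m, curr_depth_m, dt_s,
                     near_m=3.0, fast_mps=1.0):
    """Choose whether to send a frame and at what resolution scale.

    near_m and fast_mps are illustrative thresholds, not values from the study.
    """
    speed = closing_speed(prev_depth_m, curr_depth_m, dt_s)
    if curr_depth_m <= near_m or speed >= fast_mps:
        # Close or fast-approaching obstacle: keep full detail.
        return {"send_frame": True, "resolution_scale": 1.0}
    if speed > 0:
        # Slowly approaching obstacle: send a downscaled frame.
        return {"send_frame": True, "resolution_scale": 0.5}
    # Static or receding scene: filter the frame out entirely.
    return {"send_frame": False, "resolution_scale": None}


print(compression_plan(6.0, 5.5, 0.25))    # approaching at 2.0 m/s
print(compression_plan(6.0, 5.875, 0.25))  # approaching at 0.5 m/s
print(compression_plan(6.0, 6.0, 0.25))    # no relative motion
```

The point of the design is that bandwidth is spent where the detection task needs it, not where a human viewer would notice quality loss.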

Energy Efficiency Through FPGA and AI Integration

For the hardware, the researchers used a field-programmable gate array (FPGA) computation unit to run the depth-aided video compression module. The FPGA used a multi-float-point vector unit (MFVU) streaming processing architecture to improve the speed of the software and reduce the power consumption of the device. The FPGA optimised the data flow by processing the data sequentially as it arrived instead of waiting for it all to arrive at once, and used an AI model to dynamically adapt the memory resources, minimising off-chip memory access, to reduce the power consumption and support longer operational durations.

The FPGA implementation features a multi-float-point vector unit (MFVU) that processes data streams on the fly, significantly reducing the latency associated with memory access. By adapting the AI model footprint to the available on-chip memory, the system maintains low energy use, achieving an average power consumption of just 3.5 W under full operation, well below the 8 W threshold for long-term wearability.
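
The streaming idea can be shown with a loose software analogy. The sketch below uses Python generators to handle each row of a frame as it arrives, so only one row is held at a time. It does not model the MFVU hardware: the function names are hypothetical and the row mean is a stand-in for whatever per-row computation the real pipeline performs.

```python
from typing import Iterable, Iterator, List


def sensor_rows(frame: List[List[int]]) -> Iterator[List[int]]:
    """Stand-in for a sensor interface that delivers one image row at a time."""
    for row in frame:
        yield row


def stream_row_means(rows: Iterable[List[int]]) -> Iterator[float]:
    """Process each row as soon as it arrives, holding only one row in memory."""
    for row in rows:
        yield sum(row) / len(row)


# Toy 3x3 frame; a batch design would buffer the whole frame before starting.
frame = [[0, 2, 4], [10, 10, 10], [1, 2, 3]]
results = list(stream_row_means(sensor_rows(frame)))
print(results)
```

In hardware, the equivalent benefit is that intermediate data stays on-chip rather than making round trips to external memory, which is where much of the latency and energy cost tends to come from.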

The following schematic from WOAD’s original research highlights how the system integrates hardware, depth-aided video compression, and cross-modal AI processing. It maps the flow from sensor data collection to obstacle detection and user feedback via tactile and auditory alerts.

User-Centred Alerts and Real-World Feedback

From a user experience perspective, the researchers integrated a multi-sensory alert system that provided both auditory and tactile responses to alert when there is an obstacle that needs to be avoided. The feedback in the alert system provided information on the direction, distance, and hazard level of the obstacle. The multi-sensory alert system was found to be a lot more effective than a single alert system based on either tactile or auditory feedback.

To minimise cognitive load while enhancing spatial awareness, three vibrotactile motors are positioned across the forehead and temples. Each motor corresponds to a directional field-of-view sector, and vibration frequency varies with obstacle proximity (200 Hz for objects within 3 metres and 150 Hz beyond), allowing intuitive and silent feedback in noisy urban settings.
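
The mapping from obstacle position to tactile cue can be sketched as follows. The two frequencies and the 3 metre boundary come from the description above. Everything else is an assumption for illustration: the function name, the motor labels, the 45-degree half field of view, the equal three-way split into sectors, and treating exactly 3 metres as "within".

```python
def select_alert(bearing_deg, distance_m, half_fov_deg=45.0):
    """Map an obstacle's bearing and distance to a motor and vibration frequency.

    bearing_deg: angle relative to straight ahead, negative to the left.
    half_fov_deg and the equal three-way sector split are assumptions.
    """
    if abs(bearing_deg) > half_fov_deg:
        return None  # outside the assumed field of view, no alert
    sector_width = 2 * half_fov_deg / 3
    if bearing_deg < -sector_width / 2:
        motor = "left_temple"
    elif bearing_deg > sector_width / 2:
        motor = "right_temple"
    else:
        motor = "forehead"
    # Frequencies from the text: 200 Hz within 3 metres, 150 Hz beyond.
    frequency_hz = 200 if distance_m <= 3.0 else 150
    return motor, frequency_hz


print(select_alert(0.0, 2.0))
print(select_alert(-30.0, 5.0))
```

Keeping the code to one motor per sector and two frequencies mirrors the stated aim of a low cognitive load: the wearer learns a handful of cues instead of a continuous signal.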

Over 7 months of continuous use, 12 visually impaired and blind volunteers trialled the device across various indoor and outdoor environments and consistently achieved a 100% collision avoidance rate. The sensing scenarios were very diverse, ranging from snowy streets full of pedestrians and low-visibility intersections (less than 50 m visibility at night) with multiple fast-moving cars (moving above 10 m/s) to staircases and malls full of people moving dynamically around each other.

Throughout the trials, the end-to-end delay always remained below 320 ms, even during unexpected pedestrian encounters where the pedestrian velocities exceeded 3 m/s within a 1 m visual range. The power consumption didn't exceed 4 W during operation, resulting in an operating time of over 11 hours regardless of the environment. The multi-sensory alerts could also be heard by the wearer on streets with noise levels exceeding 53 dB and in malls with noise levels exceeding 38 dB.

Survey feedback from trial participants revealed that usability and responsiveness received average scores of 4.25 and 4.125 out of 5, respectively. While the device achieved flawless collision avoidance, a small percentage of users noted minor false alerts due to snowflakes and shadows, suggesting a safety-first algorithmic bias that prioritises caution over specificity.

Conclusion: Human-Centred Innovation in Assistive Tech

The work done has shown that more advanced WOADs can be lighter and more beneficial than existing solutions today. The researchers have stated that they plan to improve the appearance of the WOAD in future research using flexible electronics and soft circuit modules to transform the glasses into more fashionable sunglasses so that people would be more likely to wear them day-to-day in public.

Future iterations are expected to incorporate advances in flexible electronics to integrate thinner, lighter circuit elements into a sunglass-style frame. This human-centred design approach aims to normalise assistive technology use in public spaces while maintaining comfort and long battery life.
