Level 5 AVs: Pipe Dream or Future Reality?

AI | 04-04-2023 | By Paul Whytock

The science fiction image of getting into your autonomous vehicle (AV), telling it your destination and then sitting back and reading a book whilst it does the driving is a fun idea, but the chances of it becoming a reality any time soon are best described as a pipe dream, and there are plenty of reasons why.

Firstly, Internet-connected AVs will always be vulnerable to malicious hacking. Secondly, AI cannot fully emulate the human brain when it comes to intelligent decision-making, and some very influential experts are questioning whether developing more sophisticated AI is dangerous. A 2018 research paper published in the journal Nature argues that current AI systems have limitations in achieving human-like cognitive abilities, particularly when it comes to intelligent decision-making, but more on that later in this article.

Specific Geofenced Areas

Thirdly, fully autonomous AVs will only work in specific geofenced areas; I'll explain more on that later as well. Finally, the technological systems intended to provide the data an AV needs to function can never be 100% perfect, however stringent the validation testing they are subjected to.

Nevertheless, there is an enormous amount of work, debate and hype going on about AVs, and many technology company bean-counters see them as a potentially huge revenue source. Indeed, major semiconductor companies are predicting we will see fully autonomous Level 5 AVs by the end of this decade, which is optimistic at best.

So, while on the subject of Level 5 AVs, there is a clearly defined set of classification levels for AVs. They range from Level 0, which has no driving automation at all, through elementary Level 1 to Level 5. These levels are internationally recognised by automotive societies and institutes worldwide, but what do they all mean?

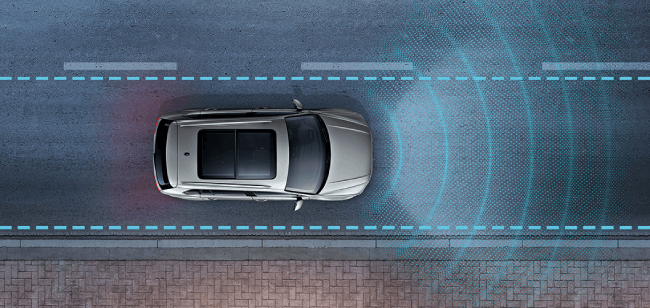

Level 1 autonomous vehicles must have a driver in control at all times. Their partially automated systems can include braking, lane positioning, cruise control and monitoring of the distance to the vehicle in front. This is a relatively basic form of autonomy, and many cars already have these features.

This includes my car, which has lane-centring automated control and distance monitoring of the vehicle or object in front. Let me say right now that I disabled my lane monitoring control after my car dangerously decided it wanted to move me away from the white line in the centre of the road and steered me towards a row of parked cars. As a mere human, I decided this was not the right option and steered back towards the white centre line.

Worldwide Software Compatibility

Now take this one simple error and think about it on a global basis. How will a supposedly autonomous car's software deal with similar situations when every country has different road marking designs, different parking regulations and roads of different dimensions? AI may be bright, but it cannot think and make decisions, so total automation in these circumstances would be a dangerous risk.

AI is probably the single biggest weak point in the drive towards fully autonomous cars. It is only as good as the data it is fed, and that data can vary enormously depending on where, when and how it was originally harvested. Currently, AI is no more than a "monkey see, monkey do" innovation.

AI's neural networks and simulated binary systems do not include any clearly defined and validated "if X happens, then do Y" programming, and as long as that remains the case, AI can never replicate the decision-making power of the human brain.
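To make the distinction concrete, here is roughly what an explicit, testable "if X happens, then do Y" rule looks like, applied to the forward distance monitoring mentioned earlier. This is a minimal sketch, not any manufacturer's code: the function names are hypothetical, and the 2-second threshold is an illustrative assumption echoing the familiar two-second following rule.

```python
# Hypothetical rule-based forward distance check; names and threshold are illustrative.

MIN_GAP_S = 2.0  # assumed threshold, echoing the two-second following rule

def time_gap_s(distance_m: float, speed_kmh: float) -> float:
    """Seconds until we reach the spot the vehicle ahead occupies now."""
    speed_ms = speed_kmh / 3.6  # km/h to metres per second
    return float("inf") if speed_ms == 0 else distance_m / speed_ms

def following_action(distance_m: float, speed_kmh: float) -> str:
    # "If X happens, then do Y": one explicit rule that can be reviewed and tested.
    if time_gap_s(distance_m, speed_kmh) < MIN_GAP_S:
        return "reduce speed"
    return "maintain speed"

print(following_action(40.0, 112.0))  # reduce speed (gap of about 1.3 s)
print(following_action(80.0, 112.0))  # maintain speed (gap of about 2.6 s)
```

Every input to a rule like this has a known meaning and every outcome can be checked, which is the kind of clearly validated behaviour a trained neural network does not expose in the same way.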

Some may argue that AI will become more intelligent on its own, but prominent industry figures have only recently called for extreme caution over how AI development progresses. As reported by The Guardian, more than 1,000 artificial intelligence experts, researchers and backers have joined a call for an immediate pause of at least six months on the creation of large AIs, so that the capabilities and dangers of systems such as GPT-4 can be properly studied.

This request was made in an open letter signed by major AI players, including Elon Musk, who co-founded OpenAI, the research lab responsible for ChatGPT and GPT-4; Emad Mostaque, who founded London-based Stability AI; and Steve Wozniak, the co-founder of Apple. In addition, Italy has just become the first European country to ban ChatGPT over privacy concerns.

At Level 2, the driver is still in control of the car from the start to the end of the journey. However, the partly automated driving support system is limited to areas such as braking and acceleration, lane centring and adaptive cruise control. An example of Level 2 technology is Tesla's Autopilot function.

When it comes to that last item, there are already well-documented incidents in America where occupants of Tesla vehicles have been killed whilst the Autopilot function was in control. A Reuters report from 2019 described a lawsuit filed against Tesla after the driver of one of its Model S cars collided with a truck on a Florida motorway and died after engaging the car's Autopilot. In another incident, a Tesla vehicle drove over a kerb and hit a brick wall, killing the driver. Clearly, Level 2 vehicles can only operate under constant human supervision.

Level 3 AV classification says the driver is no longer responsible for driving the car while the automated driving features are in operation, but must resume responsibility for handling the vehicle when those features request it. In other words, when the car cannot cope with the information being fed into its software, it calls for human intervention.

Split-second Accident Avoidance

The question here is whether the driver will be notified quickly enough when the vehicle recognises the problem. Decisions regarding accident avoidance take split-second thinking, and although humans are by no means perfect in this scenario, their brains are much better at making such judgements than a car’s software.

Level 3 and 4 vehicles are expected to generate about 1.8 terabytes of data per hour as they analyse road situations. Given that split-second decisions are needed to avoid accidents, there would be no time to upload all this data to the cloud and relay an analysed decision back to the car instructing it how to avoid the accident. So cars would have to be capable of constantly processing and analysing their own vast amounts of data, which in itself creates a substantial design challenge.
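The scale of that data problem is easy to check on the back of an envelope. The sketch below converts the 1.8 TB/hour figure into a sustained data rate and, assuming a hypothetical 200 ms cloud round trip at about 70 mph (112 km/h), estimates how far the car would travel before any reply arrived. The speed and latency are illustrative assumptions, not measured values.

```python
# Back-of-envelope check of the on-board data figure.
# 1.8 TB/hour comes from the article; speed and latency are assumptions.

TB = 10**12  # decimal terabyte, in bytes

def data_rate_bytes_per_s(tb_per_hour: float) -> float:
    """Convert a data volume in terabytes per hour to bytes per second."""
    return tb_per_hour * TB / 3600

def distance_during_delay_m(speed_kmh: float, delay_s: float) -> float:
    """Distance in metres a vehicle covers while waiting delay_s seconds."""
    return speed_kmh / 3.6 * delay_s

rate = data_rate_bytes_per_s(1.8)
print(f"{rate / 1e6:.0f} MB/s, or about {rate * 8 / 1e9:.0f} Gbit/s of sustained upload")

# Hypothetical scenario: 112 km/h (about 70 mph) and a 200 ms cloud round trip.
print(f"{distance_during_delay_m(112, 0.2):.1f} m travelled before a reply arrives")
```

On these assumptions the car would need roughly 4 Gbit/s of continuous upload capacity and would cover about 6 m while waiting for a single cloud reply, which is why the analysis has to happen on board.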

While on the subject of Level 3 cars, Honda's semi-autonomous Legend sedan, as reported by The Verge, was the world's first certified Level 3 AV. Its Traffic Jam Pilot system can control acceleration, braking and steering under certain conditions, and Honda says that drivers and passengers can relax in these circumstances and watch a movie. The system alerts the driver when it needs to hand over control, and if the driver remains unresponsive, it assists with an emergency stop by decelerating and stopping the vehicle while alerting surrounding cars with its hazard lights and horn.
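The handover behaviour described for Traffic Jam Pilot can be thought of as a small state machine. The sketch below is a simplified illustration under stated assumptions: the state names and the 10-second takeover timeout are hypothetical, and it does not represent Honda's actual implementation.

```python
from enum import Enum, auto

class Mode(Enum):
    AUTOMATED = auto()           # the system is driving
    TAKEOVER_REQUESTED = auto()  # the driver has been alerted to take over
    DRIVER = auto()              # the human has resumed control
    EMERGENCY_STOP = auto()      # decelerate to a halt with hazard lights and horn

# Hypothetical value for illustration only; not Honda's actual threshold.
TAKEOVER_TIMEOUT_S = 10.0

def next_mode(mode: Mode, can_cope: bool, driver_responded: bool,
              seconds_since_request: float) -> Mode:
    """Return the next mode given the current mode and what has just happened."""
    if mode is Mode.AUTOMATED:
        # Hand over as soon as the system can no longer cope with its inputs.
        return Mode.AUTOMATED if can_cope else Mode.TAKEOVER_REQUESTED
    if mode is Mode.TAKEOVER_REQUESTED:
        if driver_responded:
            return Mode.DRIVER
        if seconds_since_request >= TAKEOVER_TIMEOUT_S:
            # Driver still unresponsive: fall back to stopping the vehicle.
            return Mode.EMERGENCY_STOP
        return Mode.TAKEOVER_REQUESTED
    # DRIVER and EMERGENCY_STOP are treated as final in this simplified sketch.
    return mode

mode = next_mode(Mode.AUTOMATED, can_cope=False, driver_responded=False, seconds_since_request=0.0)
print(mode)  # Mode.TAKEOVER_REQUESTED
mode = next_mode(mode, can_cope=False, driver_responded=False, seconds_since_request=12.0)
print(mode)  # Mode.EMERGENCY_STOP
```

Even in this toy version, the awkward questions are how long the timeout should be and where the car happens to be when it expires.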

Imagine an emergency stop happening in the fast lane of a busy M25 motorway. Knowing that could happen, I'm not convinced drivers or passengers would have a particularly relaxing time while watching a movie, and I definitely would not recommend Sandra Bullock's film "Speed."

So AVs with Level 1 to 3 autonomy need a human driver, are therefore described as semi-autonomous vehicles, and typically contain a variety of automated add-ons known as Advanced Driver Assistance Systems. However, I question the word "advanced" in this context when, ultimately, control of the vehicle is decided by the really advanced system that is the human brain.

Restricted Operational Areas

Starting at Level 4, we can speak of fully autonomous cars. At this level, the driver is neither responsible for driving the vehicle nor expected to take over when the automated driving features are in use. However, at Level 4 these automated features can only be used in specific geofenced areas.

You may well ask what a specific geofenced area is. Basically, it's an area that has been mapped in detail using LIDAR and other sensing techniques, and it is the only area in which a Level 4 autonomous car can operate. In other words, it's an operational restriction, and in some ways it relates to my earlier comment that countries have such different road systems that there will never be a globally capable autonomous world car.
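Whatever mapping sits behind it, one basic question a Level 4 system must keep answering is whether the car is still inside its permitted area. A common way to test this is the ray-casting point-in-polygon check sketched below. It assumes the zone is a simple polygon in flat (planar) coordinates; the zone itself is hypothetical, and a real system would work with geodetic coordinates and far richer map data.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in an assumed local planar coordinate system

def inside_geofence(p: Point, fence: List[Point]) -> bool:
    """Ray casting: count how many polygon edges a horizontal ray from p
    crosses to the right; an odd count means p is inside the polygon."""
    x, y = p
    inside = False
    n = len(fence)
    for i in range(n):
        x1, y1 = fence[i]
        x2, y2 = fence[(i + 1) % n]  # wrap round to close the polygon
        # Only edges that straddle the ray's y coordinate can cross it.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets the horizontal line through p
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

# Hypothetical square operating zone (arbitrary units), not a real mapped area.
ZONE = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
print(inside_geofence((5.0, 5.0), ZONE))   # True
print(inside_geofence((12.0, 5.0), ZONE))  # False
```

The check itself is cheap; the hard and expensive part is the detailed mapping that decides where the polygon's edges can safely be drawn.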

Take, for example, Milton Keynes. Its road network is based on a grid and would be simple to map and classify as a Level 4 geofenced area, but ask that car to drive around Piccadilly Circus or the M6's Spaghetti Junction, and mayhem would ensue.

And so we come to the Level 5 classification, the Holy Grail of autonomous car design. Such vehicles do not exist, but if they ever do, they will be fully autonomous, requiring no human intervention beyond telling the car its final destination.

So, to answer the headline question of whether there will ever be a truly Level 5 autonomous vehicle, it's impossible to say never. After all, 100 years ago, any scientist predicting the invention of the World Wide Web would undoubtedly have been considered stark raving mad.

So perhaps the real question is when we will see them. Despite all the optimistic hyperbole from organisations that may have a vested interest in their successful development, I think anyone suggesting they will happen before 2030 is way off the mark.

I also don't believe a fully autonomous, globally capable Level 5 vehicle will ever be created. Remember those "specific geofenced areas"? In my view, Level 5 AVs will be created but will only operate in defined areas, and if you don't live in one, trains, planes and taxis may well remain your best bet for watching a movie or reading a book while you are transported to your destination.