What is Edge Computing? The Future of Real-Time Data Processing

Insights | 03-10-2023 | By Gary Elinoff

Often heralded as the future of real-time data processing, edge computing allows data to be evaluated and acted upon locally, rather than having to go up an electronic chain of command for permission. That is the key difference between edge and cloud computing. Its analogy, in human terms, is an organization that allows a local manager to make observations and take action on their own based on what they see. The local manager does communicate with upper management, but not in every detail.

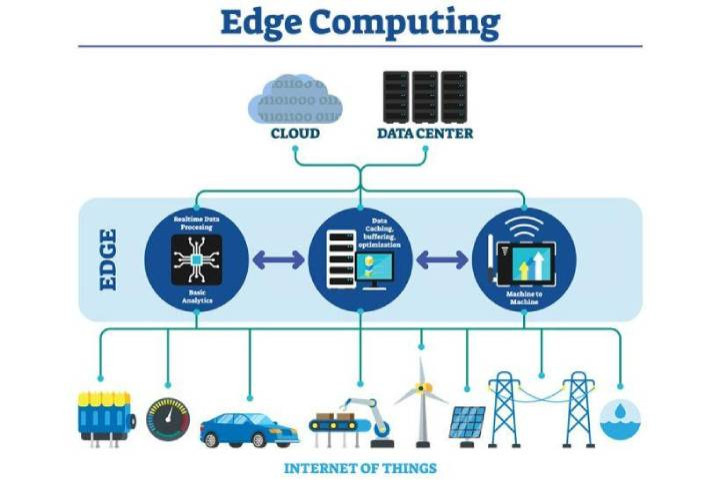

As described by IBM [2], “Edge computing is a distributed computing framework that brings enterprise applications closer to data sources such as IoT devices or local edge servers. This proximity to data at its source can deliver strong business benefits, including faster insights, improved response times and better bandwidth availability.”

As we shall see, edge computing also depends on a range of closely related technologies, including the Internet of Things, server programs and cloud computing, to make it possible.

The Internet of Things

The Internet of Things (IoT) consists of any number of nodes, each made up largely of sensors and actuators; the latter are devices that perform physical tasks, such as raising or lowering the pressure in a boiler. These nodes are the most important part of the IoT because they are where “the rubber meets the road”: the physical interface between our electronic system and the real-world events that we need to monitor and control. The nodes communicate with the controlling computer over the internet, and thinking of each node as a “thing” gave rise to the term Internet of Things.

Based on the reading of the sensors, the pressure is controlled. But how and where is the decision on the correct pressure made?

Top-Down Decision Making and Cloud Computing

In a classical system, the node’s sensors transmit the sensor data up the electronic chain of command to a central computer that decides, based on sensor data, what the correct pressure should be. The decision is transmitted back down the chain of command to the actuator, which has been held in abeyance until this point.
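To make the top-down loop concrete, here is a minimal Python sketch that models one control cycle. The names (`Reading`, `central_controller`, `cloud_control_cycle`), the 10-bar target, the proportional rule and the delay values are all hypothetical; the point is only that the actuator cannot act until the full round trip has completed.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """A pressure sample sent up the chain of command by a node."""
    pressure_bar: float


def central_controller(reading: Reading, target_bar: float = 10.0) -> float:
    """Hypothetical central decision rule: a simple proportional correction.

    A positive result means "open the relief valve further"; negative means close it.
    """
    return 0.5 * (reading.pressure_bar - target_bar)


def cloud_control_cycle(reading: Reading, one_way_delay_ms: float,
                        decision_ms: float = 1.0) -> tuple[float, float]:
    """Model one top-down cycle: uplink, central decision, downlink.

    Returns the command and the total time the actuator waited for it.
    The delays are inputs to this model, not measurements.
    """
    command = central_controller(reading)
    waited_ms = one_way_delay_ms + decision_ms + one_way_delay_ms
    return command, waited_ms


if __name__ == "__main__":
    command, waited = cloud_control_cycle(Reading(12.0), one_way_delay_ms=40.0)
    print(f"command={command}, actuator waited {waited} ms")
```

With a 40 ms one-way delay, the actuator idles for 81 ms before it receives a command it could, in principle, have computed on the spot.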

An operation of this sort can be implemented via cloud computing, meaning that the node and the central system communicate over the cloud (the internet). However, as Intel [3] points out, “Cloud computing is being pushed to its limits by the needs of the services and applications it supports.”

The Transformative Impact of Edge Computing

According to IEEE [1], traditional cloud computing faces challenges such as data security threats, performance issues, and rising operational costs. Edge computing addresses these challenges by decentralizing data processing, thereby reducing dependence on the cloud.

Edge computing, beyond its theoretical foundations, has tangible benefits and applications across industries, from healthcare to logistics, underscoring its transformative impact. For instance, in the logistics sector, edge computing plays a pivotal role in optimizing supply chains. As highlighted by DHL, edge computing can facilitate real-time tracking, predictive maintenance, and even autonomous vehicles in logistics, leading to more efficient and cost-effective operations.

Similarly, in smart cities, edge computing aids in real-time traffic management, reducing congestion and improving transportation efficiency. In healthcare, it enables real-time patient monitoring, ensuring timely medical interventions.

IBM notes that edge computing's proximity to data sources can deliver quicker insights, better response times and better bandwidth availability, all of which are essential for real-time applications.

Edge Computing Adds an Additional Element

A node in a system based on edge computing has an additional decision-making element, such as a microprocessor, to decide locally what the pressure should be. While the node bases its decision on guideposts it previously received from higher up, and perhaps from other nodes in the system, the decision itself is made locally, far faster than in a system that is micromanaged from afar. But the benefits don’t end there.
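The division of labor described above can be sketched as a small Python class: the central system occasionally pushes down "guideposts," and the node acts on every reading without waiting for anyone. The `Guideposts` and `EdgeNode` names, the alert threshold and the proportional rule are hypothetical illustrations, not a specific vendor's design.

```python
from dataclasses import dataclass, field


@dataclass
class Guideposts:
    """Hypothetical limits pushed down occasionally by the central system."""
    target_bar: float
    alert_above_bar: float


@dataclass
class EdgeNode:
    guideposts: Guideposts
    gain: float = 0.5                            # illustrative proportional gain
    outbox: list = field(default_factory=list)   # messages waiting to go upstream

    def update_guideposts(self, new: Guideposts) -> None:
        """Called rarely, when upper management changes the rules."""
        self.guideposts = new

    def on_reading(self, pressure_bar: float) -> float:
        """Decide locally and return the actuator command immediately."""
        command = self.gain * (pressure_bar - self.guideposts.target_bar)
        if pressure_bar > self.guideposts.alert_above_bar:
            # Only exceptional events are reported, not every reading.
            self.outbox.append(("alert", pressure_bar))
        return command


if __name__ == "__main__":
    node = EdgeNode(Guideposts(target_bar=10.0, alert_above_bar=15.0))
    print(node.on_reading(12.0), node.outbox)   # acts locally, nothing queued
    print(node.on_reading(16.0), node.outbox)   # acts locally, one alert queued
```

The node never blocks on the network: upstream traffic is limited to occasional guidepost updates and the exceptional alerts it chooses to send.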

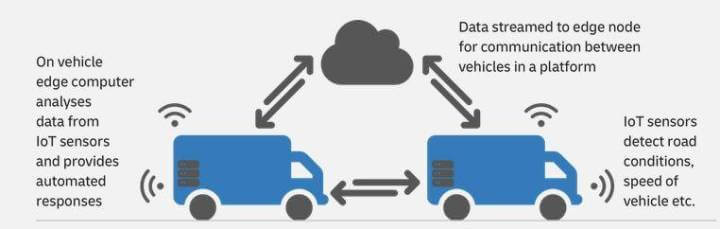

Edge computing can enable “platooning” of autonomous vehicles. In the example below, DHL illustrates how multiple trucks can travel with only one driver.

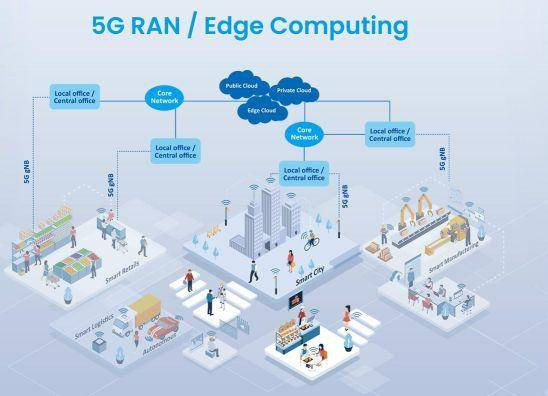

Real-Life Use Cases for Edge Computing. Image source: DHL [4]

In this example, multiple trucks travel closely behind one another, saving fuel and decreasing congestion. Through edge computing, the need for a driver in each truck is eliminated; only the first truck requires one, and the following vehicles communicate with each other with ultra-low latency.

Another compelling use case of edge computing is in the realm of 5G technology. As Mitac [7] points out, edge computing is integral to the deployment of 5G RAN (Radio Access Network). By processing data closer to the source, edge computing helps 5G networks handle vast amounts of data with minimal latency, paving the way for innovations such as smart cities and advanced IoT applications.

Edge computing offers numerous advantages, including increased data security, enhanced application performance, reduced operational costs, and improved business efficiency. It also offers unlimited scalability, conserves network and computing resources, and significantly reduces latency, as highlighted by IEEE.
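Why truck platooning depends on low latency can be shown with simple arithmetic: a following truck keeps moving at full speed until the lead truck's braking message arrives. The sketch below assumes a highway speed of 90 km/h and a few illustrative latency values; none of these figures come from DHL, and the function name is hypothetical.

```python
def distance_before_reaction_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres a following truck covers before a message from the lead truck arrives."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


if __name__ == "__main__":
    # Illustrative latencies only.
    for latency_ms in (200, 50, 10):
        metres = distance_before_reaction_m(90, latency_ms)
        print(f"{latency_ms:>4} ms -> {metres:.2f} m travelled before reacting")
```

At 90 km/h (25 m/s), a 200 ms delay corresponds to 5 m of travel, while 10 ms corresponds to 0.25 m, which is why tight following distances require the trucks to talk to each other directly rather than through a distant server.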

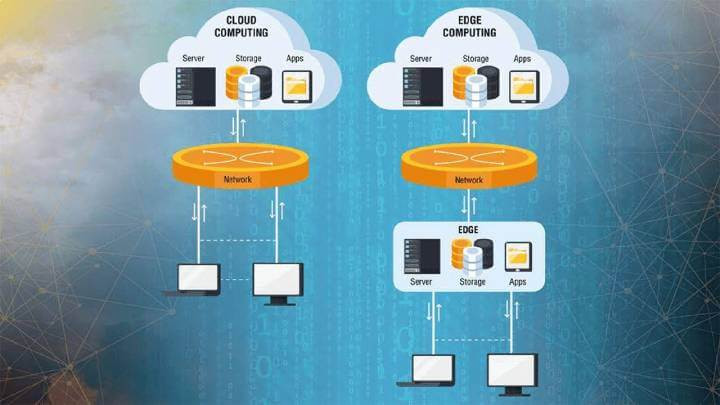

Cloud Computing as it relates to Edge Computing

OK, so now we know that cloud computing requires constant communications with remote computers. We’ve also learned that those “computers” are often server programs that reside in data centers. These data centers are often located thousands of miles away, often in areas where electricity is cheap, and the climate is cold, so the amount of energy required for cooling is minimized. It is readily apparent that edge computing and cloud computing go hand-in-hand because, under a regime of edge computing, most decisions are made locally. This vastly cuts down on the volume of communication needed between the local IoT nodes and the server.

Edge computing contrasted with cloud computing. Image source: iebmedia5

Hewlett Packard [6] describes this paradigm as “the modern edge-to-cloud environment,” with most decisions being made locally. Because far less data has to travel over the cloud between the local IoT nodes and the server, latency is reduced, the internet is relieved of traffic, and fewer demands are placed on the servers.
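One common way an edge node cuts upstream traffic is report-by-exception with a deadband: the node handles routine readings itself and forwards a value only when it has changed meaningfully since the last one it sent. This is one illustrative technique, not necessarily the mechanism Hewlett Packard describes; the function name and threshold below are hypothetical.

```python
def report_by_exception(readings: list[float], deadband: float) -> list[float]:
    """Return only the readings an edge node would forward upstream.

    A reading is forwarded when it differs from the last forwarded value by
    more than `deadband`; everything else is handled locally.
    """
    forwarded: list[float] = []
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > deadband:
            forwarded.append(value)
            last_sent = value
    return forwarded


if __name__ == "__main__":
    pressures = [10.0, 10.1, 10.2, 10.1, 11.5, 11.6, 10.0]
    sent = report_by_exception(pressures, deadband=0.5)
    print(f"forwarded {len(sent)} of {len(pressures)} readings: {sent}")
```

Here only three of seven readings leave the node; the rest are absorbed locally. Choosing the deadband trades upstream traffic against how closely the central system tracks the process.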

An essential component of cloud computing is the server. But what exactly is a server?

What is a Server?

OK, now we know that each node has local decision-making power, and that each node communicates with other nodes and with a central controlling system. But this central system is probably not a single dedicated computer; it is more likely to be a program known as a server.

A server is a computer program dedicated to a specific task, such as managing our IoT system. The server typically runs on a group of computers housed in what is called a data center. A data center typically hosts many servers dedicated to any number of processes, which can be entirely independent of one another.
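To show that a "server" is simply a program that waits for requests and answers them, here is a minimal Python sketch using only the standard library's `http.server`. The endpoint, the JSON fields (`pressure_bar`, `valve_adjust`) and the 10-bar decision rule are hypothetical; real IoT back ends often use other protocols, such as MQTT, and run behind far more infrastructure.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class ReadingHandler(BaseHTTPRequestHandler):
    """Hypothetical endpoint: POST {"pressure_bar": x} and receive a command."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        reading = json.loads(self.rfile.read(length))
        # Illustrative decision rule with a 10-bar target.
        reply = {"valve_adjust": 0.5 * (reading["pressure_bar"] - 10.0)}
        body = json.dumps(reply).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def start_server(port: int = 0) -> HTTPServer:
    """Serve in a background thread; port 0 asks the OS for any free port."""
    server = HTTPServer(("127.0.0.1", port), ReadingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def send_reading(port: int, pressure_bar: float) -> dict:
    """Client side: what an IoT node would do to ask the server for a decision."""
    request = urllib.request.Request(
        f"http://127.0.0.1:{port}/",
        data=json.dumps({"pressure_bar": pressure_bar}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    server = start_server()
    try:
        print(send_reading(server.server_address[1], 12.0))
    finally:
        server.shutdown()
        server.server_close()
```

The same program could run on a laptop or on a rented machine in a data center; what makes it a server is its role of listening for and answering requests.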

The purpose of data centers is flexibility and economy. Our IoT system’s server will require differing amounts of computational power based on how active it is at any given time. The same thing is true for all the other servers resident within the data center. For example, one server may be dedicated to a medical system that analyzes MRI results. Another might host accounting programs, and the list goes on.

The central point is that each server substitutes for a dedicated computer that would otherwise have to be powerful enough to handle the maximum computational load that, for example, the medical system or the accounting system may require.

Edge computing will make smart cities possible. Image source: Mitac [7]

The beauty of a server system is that the data center is sized for the combined demand of all its server programs, which is far less than the sum of each program's individual peak, because those peaks rarely occur at the same time. This represents an enormous saving in total computational resources. Data centers are also flexible: as the total computational power required by their servers grows, the specialized operators of the data centers can conveniently add capacity as it is needed.
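The saving can be illustrated with a toy calculation. The three tenants and their demand figures below are invented for illustration; the sketch compares buying a dedicated machine sized for each tenant's own peak with sizing one shared pool for the peak of the combined load.

```python
# Invented demand, in CPU cores, for three tenants over six time slots.
LOADS = {
    "iot_monitoring": [2, 2, 8, 2, 2, 2],
    "mri_analysis":   [1, 6, 1, 1, 1, 1],
    "accounting":     [1, 1, 1, 1, 7, 1],
}


def capacity_needed(loads: dict[str, list[int]]) -> tuple[int, int]:
    """Return (dedicated, shared) capacity in cores.

    dedicated: every tenant owns a machine sized for its own peak.
    shared:    one pool sized for the busiest slot of the combined load.
    """
    dedicated = sum(max(series) for series in loads.values())
    combined = [sum(slot) for slot in zip(*loads.values())]
    shared = max(combined)
    return dedicated, shared


if __name__ == "__main__":
    dedicated, shared = capacity_needed(LOADS)
    print(f"dedicated machines: {dedicated} cores, shared pool: {shared} cores")
```

With these numbers, dedicated machines need 21 cores in total, while the shared pool needs only 10, because each tenant's busy period falls in a different slot. If all peaks coincided, the two figures would be equal and pooling would save nothing.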

The bottom line is that our IoT system does not need to acquire computers that can serve it at its maximum need. Rather, it can rent remote computer power and pay for it as needed. As an analogy, a given home might need at its maximum 10 kilowatts but usually only uses 1 kilowatt. It doesn’t pay for the entire 10 kilowatts all the time but is billed for whatever amount of power it requires at the times it is needed.

Other benefits of the edge-to-cloud environment include a reduction of latency, cloud bandwidth savings and reduced power requirements.

Reduction of Latency

Latency, the delay between a sensor's observation and the resulting action, is significantly reduced with edge computing. If a node sensor’s output has to be transmitted over the internet to a distant location and a decision must then travel back to the node, precious time is lost. Although light travels at 186,000 miles per second in a vacuum, signals in optical fiber move at only about two-thirds of that speed, and the routers, queues and servers along the path add further delay. Edge computing avoids most of this back-and-forth exchange of information over thousands of miles.
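A back-of-the-envelope calculation shows the minimum price of distance alone. The sketch assumes light in optical fiber travels at roughly two-thirds of its vacuum speed and ignores routing, queuing and processing, which add more delay in practice; the 2,000-mile and 0.1-mile distances are illustrative.

```python
SPEED_OF_LIGHT_MILES_PER_S = 186_000
FIBER_VELOCITY_FACTOR = 0.67  # assumption: typical for glass fiber (about 2/3 c)


def round_trip_propagation_ms(distance_miles: float,
                              velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Lower bound on round-trip delay from signal propagation alone, in milliseconds."""
    one_way_s = distance_miles / (SPEED_OF_LIGHT_MILES_PER_S * velocity_factor)
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    for miles in (2000, 0.1):   # distant data center vs. equipment on site
        print(f"{miles:>7} miles: {round_trip_propagation_ms(miles):.4f} ms")
```

For 2,000 miles this gives about 32 ms before any router or server has done any work, compared with roughly 1.6 microseconds over a tenth of a mile.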

Internet Bandwidth

The amount of information that the internet can carry in a given time is called its bandwidth, and while it is huge, it has limits. Because there are many millions of devices on the IoT, if every decision required at each IoT edge point involved a round trip to a server, bandwidth would be strained. The resulting congestion would slow communication and worsen latency.
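The scale of the problem can be estimated with simple arithmetic. The device count, message size, reporting rate and forwarded fraction below are hypothetical round numbers, chosen only to show how quickly raw traffic adds up and how much local decision-making can remove.

```python
def aggregate_gbps(devices: int, bytes_per_message: int, messages_per_s: float,
                   forwarded_fraction: float = 1.0) -> float:
    """Total upstream traffic in gigabits per second."""
    bits_per_s = devices * bytes_per_message * 8 * messages_per_s * forwarded_fraction
    return bits_per_s / 1e9


if __name__ == "__main__":
    # Hypothetical: 10 million devices, 200-byte messages, 10 messages per second.
    every_reading = aggregate_gbps(10_000_000, 200, 10)
    edge_filtered = aggregate_gbps(10_000_000, 200, 10, forwarded_fraction=0.01)
    print(f"every reading sent upstream: {every_reading:.1f} Gbit/s")
    print(f"1% forwarded after local decisions: {edge_filtered:.1f} Gbit/s")
```

Under these assumptions, sending every reading upstream produces a constant 160 Gbit/s, while forwarding only 1% after local decisions reduces it to 1.6 Gbit/s.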

Conserving Server Power Requirements

Computer servers are estimated to consume a surprising 2% to 5% of the world’s total electrical power. Even if it were possible for each of the innumerable IoT devices to refer to servers for every decision, the power requirements of the servers would be unsustainable.

Wrapping Up

Hewlett Packard's perspective on edge computing underscores its symbiotic relationship with cloud computing. Termed the "edge-to-cloud environment," this paradigm shift ensures that while most decisions are made locally at the edge, the cloud still plays a role in storage, analytics, and broader decision-making processes. Such a setup not only reduces latency but also conserves network bandwidth and minimizes server power requirements, leading to a more sustainable and efficient digital ecosystem.

The fusion of edge and cloud computing is paving the way for a more connected, efficient, and responsive digital world. As technology continues to evolve, edge computing will undoubtedly play a pivotal role in shaping the future of real-time data processing and IoT applications.

Challenges and Opportunities

While edge computing offers numerous advantages, it's not without challenges. The decentralized nature can sometimes lead to security concerns, and the initial setup cost for edge infrastructure can be high. Moreover, managing multiple edge devices can become complex, requiring robust management solutions.

It's essential for industries to strike a balance between leveraging the benefits of edge computing and addressing its challenges. With continuous advancements and innovations, the potential of edge computing is vast, promising a transformative impact across various sectors.

Edge computing enables real-time monitoring of industrial enterprises and smart grids, as well as the maintenance of oil and gas pipelines, enhancing safety and cutting costs. Edge computing makes all of this and more possible because, without local control, power and data transmission requirements would be unsustainable, and huge latencies would make timely decision-making impossible.

Manufacturers are keen on this, and companies worldwide are striving to outdo each other in designing silicon systems on a chip (SoCs) that combine the monitoring, actuation and communication functions needed at the node, making edge computing simpler, cheaper and easier to design.

However, the most important takeaway is that just as human organizations work better when operators on the ground make most of the decisions, exactly the same thing can be said for digital systems.

References:

1. Real-Life Use Cases for Edge Computing - IEEE

2. What is edge computing? - IBM

3. What is Edge Computing - Intel

4. Trend Overview - DHL

5. Edge computing key driver for wider IoT deployments - iebmedia

6. Edge to Cloud - Hewlett Packard

7. 5G RAN / EDGE Computing - Mitac

Glossary

5G RAN: 5G radio access network

Bandwidth: The amount of information a network, such as the internet, can carry in a given time.

Cloud Computing: The provision of flexible computing capacity to a myriad of users located anywhere on the internet.

Data Center: A data center is a physical facility that houses a large array of computational resources that serves as a hub for cloud computing operations.

Internet of Things: A computer network that connects individual subsystems of sensors and devices to other such subsystems located anywhere in the world. This arrangement allows them to communicate with each other to accomplish overall goals.

Server: Often part of a data center, a server is a computer that communicates with other digitally-based systems over a network.