The Computer Bounce Effect

09-04-2020 | By Robin Mitchell

Since their conception, computers have undergone many historic changes in their architecture, capabilities, and location. One effect in particular is the constantly shifting location of computation, with users switching back and forth between a centralised computer and local devices. In this article we will look at this effect, why it has happened, and where it could lead.

The First Computers – Origins

While computing machines date back as far as the 19th century, with a prime example being Charles Babbage's Difference Engine, modern computers only arrived in the mid-1940s with the introduction of ENIAC, generally regarded as the first general-purpose electronic computer (the WW2 British-built Colossus was also an electronic computer and came slightly earlier, but it was specialised to one specific task). These first computers consisted of many thousands of valves and/or relays, with monumental switchboards for programming and vast amounts of electrical cabling to connect it all together. Due to their expense and the general lack of understanding as to what computers could be capable of, their use was largely limited to scientific research, military computation, and other government-related problems (such as a census).

As the decades went by, improvements in electronics saw valves replaced with transistors and racks replaced with PCBs, which led to a dramatic improvement in computational performance. However, even the simplest computers were still too large and expensive to be owned and operated by individuals, which led to multiple users sharing a large central computer simultaneously through terminals. Users could design their own programs and algorithms, transcribe them to machine code, wait for their allotted computer time, upload their program, and wait for the results. The introduction of the integrated circuit allowed for more complex circuitry and therefore increased computational power, but the real paradigm shift would not occur until Intel introduced the 4004 CPU in 1971.

The First Bounce – The 80s

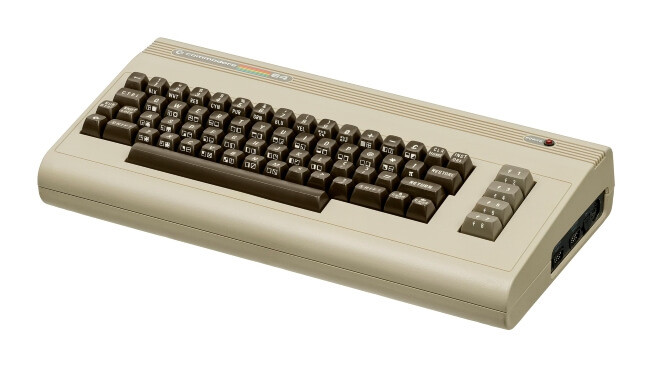

The introduction of the Intel 4004 CPU changed how the world approached computing. Instead of being constructed from discrete logic, computers could be built around a generic low-cost CPU with variable amounts of ROM, RAM, and peripherals. While such CPUs had specific bus protocols for accessing external memory and I/O, the arrangement of these, as well as how those I/O devices operated, was left entirely to the designer. As microprocessors matured, this led to a wave of machines such as the ZX Spectrum, BBC Micro, and Commodore 64 that all offered different computational capabilities and applications.

A Commodore 64, an 8-bit home computer introduced in 1982 by Commodore International.

While the processing capabilities of these computers were minuscule by comparison to the large mainframes produced by companies such as IBM, they were able to run the vast majority of applications wanted by home users, including word processing, spreadsheets, programming, and gaming. The use of such microcomputers saw computing move from large time-shared mainframes to the home, which was the first bounce in computing.

The Second Bounce – The Internet Age

With home computers becoming ever more powerful and their practical uses increasing, it was not long before the 90s saw the Internet arrive in the home. Machines which had operated in total isolation could suddenly start talking to each other via telephone lines with the use of dial-up modems. As more and more computers became internet capable, ISPs around the world improved their infrastructure to handle more traffic, with dial-up being replaced by broadband, which is now being replaced by fibre. Not only did wired technologies improve, but so did radio technologies, with Wi-Fi and Bluetooth in particular being critical to the next computation bounce.

The reduction of microcontroller costs, coupled with high-speed internet and radio technologies, saw designers begin to integrate internet technologies into simple devices. Instead of only large desktop computers having internet capabilities, devices such as light bulbs, doorbells, and even toasters gained the ability to send and receive requests over the internet; this was the birth of the Internet of Things, or IoT. While IoT devices allowed for remote monitoring and control, they also opened up a whole new opportunity for designers in the AI field, as these simple devices numbered in the millions and all produced valuable data. It was not long before companies such as Google and Amazon constructed data centres dedicated to gathering data for use in improving AI algorithms that could provide users with a whole new experience. Suddenly, simple IoT devices could use their internet connection to request complex AI processing on data that they had gathered and then be returned the result, in a near identical fashion to the first computers, whereby users would time-share a mainframe. One common example of this is the Amazon Echo, which does not contain the processing power needed for accurate speech-to-text but instead relies on cloud computing to process the speech and execute the spoken command.

But the concept of cloud computing goes far beyond IoT devices relying on a remote computer for data processing; even everyday applications are moving back to data centres. Online file storage allows multiple devices to access the same file from any location, while online office suites allow word processing, spreadsheets, and presentations to be developed on any platform that has a browser. This computational shift from personal devices back to mainframes and data centres is the second computer bounce.

The Third Bounce – Edge Computing

The introduction of cloud computing demonstrated to users the convenience of remote computing and how little the specifications of their own computer mattered when using those resources. So long as a device had an internet connection, some rudimentary computational power, and minimal graphics capability, it could access online videos, write online documents, and use data centres to execute AI algorithms. Not only were these services cross-platform, but the vast majority of them were also free, making them incredibly popular. However, it was not long before users began questioning the services and wondering if they were too good to be true. Sure enough, evidence began to surface that cloud service providers were gathering data on their users and building profiles around them. These profiles would often include sensitive information such as names, dates of birth, residential addresses, search history, and websites visited. This information would then be used to track users online and to create targeted advertisements, which would generate revenue for the cloud service provider. As expected, the public did not feel comfortable with the concept of their personal information being gathered in exchange for access to free services.

This privacy concern was amplified when the general public became aware of massive insecurities in IoT devices. The first IoT devices often handled data (such as temperature and humidity) which in itself was considered benign (i.e. insensitive and impersonal). As a result, these devices did not implement strong security features such as encrypted communications, unique passwords, and secure booting methods. However, as IoT devices increased in complexity, the data which they gathered became sensitive in nature, with some examples including recorded conversations and camera imagery. Yet designers made few (if any) security changes, leaving millions of internet-connected devices at risk of remote attack by cybercriminals.

To the surprise of no one, hackers began to create malware specifically targeted at IoT devices to gather data on users, perform DDoS attacks, or mine cryptocurrency, with one well-known example being the Mirai botnet malware, which was used to launch DDoS attacks. Another example of how these IoT devices presented serious security threats was when a hacker (or hackers) gained entry into a casino's IoT aquarium thermometer and then used it to gain access to the local servers. From there, personal details of high-rollers were obtained, leaving those individuals at risk of theft or blackmail. The risk that insecure IoT devices posed led to a movement by the public, engineers, and governments to bring in regulation that would outlaw such devices. This is where the third bounce would commence.

The desire for secure devices with privacy in mind saw designers take a new approach to where data processing should be performed and how those devices should handle sensitive information. Improvements in silicon technology not only saw microcontrollers become more advanced but also saw the introduction of dedicated hardware security, with true random number generators, on-chip encryption, secure booting methods to ensure authentic firmware, and secure storage. Hardware security measures would help to strengthen the device locally but not remotely, as users' data would still be streamed to the cloud for storage and processing. Therefore, designers questioned how much of the gathered data could be processed locally and how little data the device could stream while still providing useful services. Thus the concept of edge computing was born, whereby devices process their own data locally and react to it, reducing the need for a remote machine to perform computational tasks.
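As a rough illustration of that idea, the sketch below shows a device reducing its raw sensor readings to a small summary and only uploading anything when a reading crosses a threshold. It is a minimal sketch under stated assumptions, not how any particular product works: the function names, the temperature threshold, and the shape of the summary are all hypothetical.

```python
from statistics import mean

# Hypothetical threshold (degrees Celsius) chosen purely for illustration.
ALERT_THRESHOLD_C = 30.0


def summarise_locally(readings, threshold=ALERT_THRESHOLD_C):
    """Reduce raw sensor readings to a small summary on the device itself."""
    if not readings:
        return None
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alert": max(readings) > threshold,
    }


def payload_for_cloud(readings, threshold=ALERT_THRESHOLD_C):
    """Return what the device would upload: nothing unless an alert fires."""
    summary = summarise_locally(readings, threshold)
    if summary is None or not summary["alert"]:
        return None  # handled locally, so no data leaves the device
    return summary  # only the summary is sent, never the raw readings
```

In this sketch a normal batch of readings produces no upload at all, and even an alert sends only four summary values rather than the raw data stream.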

One of the biggest tasks that IoT devices have processed remotely is AI, but the introduction of AI on silicon meant that microcontrollers could download neural networks from a cloud-based service and then run the AI algorithms locally (either partially or fully). Another key concept that designers have started to experiment with is data obfuscation, whereby sensitive data is partly processed on the device before being sent to a cloud-based service for further processing. The resulting data is still useful for AI deep learning, but the raw sensitive information cannot easily be recovered from it. This shift from cloud-based computing back to the local device is the third bounce of computational location.
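The idea of partly processing sensitive data before it is uploaded can be sketched as follows. This is only an illustrative assumption of what such a step might look like: the record fields (`user_id`, `timestamp`, `temperature`), the `obscure_record` function, and the choice of a salted hash plus coarsening are hypothetical, and on their own they do not guarantee anonymity.

```python
import hashlib


def obscure_record(record, salt):
    """Return a reduced copy of a record for upload (illustrative only).

    Assumes record has 'user_id' (str), 'timestamp' (int seconds),
    and 'temperature' (float). These field names are hypothetical.
    """
    # Replace the identifier with a salted hash so the raw ID is not sent.
    digest = hashlib.sha256((salt + record["user_id"]).encode("utf-8"))
    user_token = digest.hexdigest()[:16]
    return {
        "user_token": user_token,
        # Coarsen the timestamp to the start of the hour.
        "hour": record["timestamp"] // 3600 * 3600,
        # Coarsen the reading to whole degrees.
        "temperature": round(record["temperature"]),
    }
```

The cloud service still receives enough to learn trends (which token, roughly when, roughly what value), while the exact identifier, time, and reading stay on the device.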

The Future

Predicting how future computers will operate is a complex task, as so many technologies are being developed daily. Computers of the future may use light as a data transfer medium, with PCBs having both copper and optical layers, or could use quantum processors for handling large parallel tasks such as search functions. If the bouncing trend continues, then data processing will head back to large dedicated computers, but where these computers will be located is unclear. One possibility is that households will have their own personal mainframe which houses all user files and applications, allows for remote computing from anywhere around the world, and hosts their own personal cloud functions. These systems would run their own AI algorithms and gather data on their users to learn from, but since the computer is the personal property of the homeowner, the gathering of personal data would be less of a concern. Such a system could also provide a source of revenue, with homeowners being able to link their micro-mainframes to cloud services and either provide computational power to the globe or sell their personal data for larger systems to learn from.

However, one thing can be said for sure: this decade will see privacy and security at the centre of computing.