Whitepaper: Using Radar and Vision Fusion for Improved Low-light Pedestrian Detection

28-03-2024 | By Jack Pollard

Radar and vision fusion is setting a new standard for automotive safety, particularly in challenging low-light conditions. In this whitepaper, Dr. Peter Gulden and Dr. Zorawar Bassi of indie Semiconductor unveil groundbreaking research on enhancing pedestrian and bicycle detection at night. By integrating radar with vision sensors through innovative low-cost processing solutions, this work represents a leap forward in making advanced safety features accessible for mass-market vehicles.

Abstract

Using multiple sensor modalities is becoming a critical design requirement in automotive safety systems. The reasons are manifold: redundancy, robustness to adverse weather and challenging scenes, and improved accuracy from complementary features. The placement and interaction, or fusion, of these sensors can vary widely. We present results from combining radar and image (vision) sensors using low-cost processors that can be placed in various locations throughout the vehicle, whether at the edge or in a zonal configuration intermediate between edge and centralised topologies. The sensors are fused for object detection, which is far more robust under varied environmental conditions than detection using a single modality. We also showcase indie's unique approach of operating several radars cooperatively, which increases the number of detection points and provides full vector velocity. Our focus for this investigation was pedestrian and bicycle detection in an automotive backup scenario under low-light conditions. While sensor fusion has so far required powerful computing resources, we show how vision and radar fusion can be accomplished with modest processing power, helping to bring the safety benefits of multi-modal sensing to mass-market vehicles.

Introduction

Today’s vehicles rely on numerous sensors for safety, as well as to support various levels of automation; ultimately, this automation also aims to increase safety and provide a more efficient driving experience. Camera image sensors are among the most widely used sensor types, since they most closely resemble human vision, the most fundamental human capacity used for driving. Like human vision, cameras are easily impaired, whether by challenging environmental conditions such as low light or fog, or simply by dirt obstructing the lens. Hence cameras are often paired with complementary sensors such as radar, which is largely immune to the conditions that impair a camera. Radar sensors also have their own strengths over image sensors, such as providing accurate distance and velocity data. Fusing a camera with radar can enhance a safety system by improving accuracy and robustness, as well as by providing redundancy.

Perhaps the most widely used camera-based safety system, and one of the first to be mandated by regulation, is the backup camera. Although development of and funding for advanced vehicle AI systems aimed at full autonomy have grown greatly over the last decade, the simple backup camera remains the most widely used and available safety system. Its simplicity allows drivers of all generations to understand and adopt it. In recent years, the backup camera has gained smarts, or some level of AI, allowing it to detect objects in its view, which in turn can trigger an emergency braking feature. Adding smarts, where the camera provides a decision or ‘opinion’ on safety rather than simply a picture, greatly increases the need for accuracy and robustness. This is even more true in tough environmental conditions (e.g. night time), where a driver’s own vision may be challenged. As a relatively simple safety system that must fit inconspicuously into a compact space, a backup camera is required to be low cost, low power, and small. These requirements often conflict with smart features, which tend to necessitate large sensors and additional processing ICs. The conflict is exacerbated by the requirement for high accuracy and robustness, which in turn demands more ‘smarts’, i.e., more processing power.

In this paper, we return to this most fundamental safety system, the backup camera with some basic smarts, though our results are equally applicable to any “smart” viewing camera around the vehicle (e.g. a side mirror camera system). Such smart backup cameras have been available for some time; however, given their low cost, their performance under tough environmental conditions is often suboptimal, limiting the features (such as audio warnings and emergency braking) that can be activated. We study these cameras, with the smarts to detect pedestrians and bicycles, under low light (<10 lux), where even a human may have difficulty detecting them. We then combine the camera with a radar sensor using a simple fusion approach and show how performance improves. Extensive work has been done on multi-sensor fusion, including camera and radar fusion. The majority of it focuses on algorithms and architecture, often using complex machine learning models better suited to high autonomy, without considering hardware implementation and cost. A key objective here was to keep system cost low, with low- to mid-priced vehicles in mind. Hence, in our approach, all processing is done on the integrated processor inside the camera; no additional processors are needed for the fusion. Aside from the camera, the only additional hardware is the radar front end. As vehicles increasingly use multiple sensor types, many already include radar systems, such as corner radars. We reuse the data from any existing vehicle radar system, rather than adding a radar specific to the smart backup application, to keep the cost low.
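To make the camera-led idea concrete, here is a minimal sketch; it is not the authors' “Simple Fusion Algorithm”. It assumes the vision detector proposes bounding boxes with confidence scores and radar only confirms them, that radar cluster centroids have already been transformed into the camera coordinate frame, and that a pinhole model with intrinsics fx, fy, cx, cy describes the camera (a real wide-angle backup lens would also need distortion correction). The names `Box`, `RadarCluster`, `project` and `fuse`, and the `boost` and `threshold` values, are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Vision detection: pixel-space bounding box, detector confidence and class label."""
    x0: float
    y0: float
    x1: float
    y1: float
    score: float
    label: str


@dataclass
class RadarCluster:
    """Radar cluster centroid in camera coordinates (metres): x right, y down, z forward."""
    x: float
    y: float
    z: float


def project(c, fx, fy, cx, cy):
    """Pinhole projection of a 3-D point to pixels; None if the point is behind the camera."""
    if c.z <= 0:
        return None
    return fx * c.x / c.z + cx, fy * c.y / c.z + cy


def fuse(boxes, clusters, intrinsics, boost=0.25, threshold=0.5):
    """Vision proposes, radar confirms: a box containing a projected cluster gets a score boost.

    Returns (label, fused_score, radar_confirmed) for each box whose fused score passes threshold.
    """
    projected = (project(c, *intrinsics) for c in clusters)
    points = [p for p in projected if p is not None]
    kept = []
    for b in boxes:
        confirmed = any(b.x0 <= u <= b.x1 and b.y0 <= v <= b.y1 for u, v in points)
        score = min(1.0, b.score + boost) if confirmed else b.score
        if score >= threshold:
            kept.append((b.label, score, confirmed))
    return kept


# Usage: a dim pedestrian the detector alone would reject, plus an unconfirmed false positive.
intrinsics = (800.0, 800.0, 640.0, 360.0)
boxes = [Box(600, 300, 700, 500, 0.35, "pedestrian"), Box(100, 100, 200, 300, 0.45, "bicycle")]
clusters = [RadarCluster(0.1, 0.2, 4.0)]  # projects to pixel (660, 400)
print(fuse(boxes, clusters, intrinsics))  # keeps only the radar-confirmed pedestrian
```

Keeping vision as the sole detector means radar never creates a detection on its own; it only lifts low-confidence boxes, which keeps the fusion step cheap enough for an in-camera processor.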
Our overall pipeline has components similar to those of an existing general camera-radar fusion framework. However, those authors treat the camera and radar as independent detectors and use a more complex probability model for fusion, independent of any hardware considerations, whereas we start with a small-footprint device, build our architecture accordingly, and use only the vision system as the detector. We also present a new approach in which several radars operate cooperatively to increase the number of detection points and provide full vector velocity. The additional detections and full velocity can improve radar clustering and, in turn, the fused detection.
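Why several radars help with velocity can be illustrated with textbook geometry. A single radar measures only the radial (Doppler) component of a target's velocity, so a pedestrian crossing perpendicular to its line of sight shows almost no Doppler. Two radars viewing the same target from different positions each measure a different projection, and the full 2-D velocity follows from a 2 × 2 linear solve. This is a generic multi-radar illustration, not indie's cooperative scheme; it assumes a stationary (or ego-motion-compensated) vehicle, a 2-D ground-plane model, radar positions known in a common vehicle frame, and a target already associated across both radars. The function names and mounting positions are hypothetical.

```python
import math


def unit(dx, dy):
    """Unit vector along (dx, dy)."""
    n = math.hypot(dx, dy)
    return dx / n, dy / n


def radial_speeds(target, radars, velocity):
    """Simulate each radar's measurement: velocity projected on its line of sight (+ = receding)."""
    out = []
    for rx, ry in radars:
        ux, uy = unit(target[0] - rx, target[1] - ry)
        out.append(ux * velocity[0] + uy * velocity[1])
    return out


def full_velocity(target, radars, radial):
    """Recover (vx, vy) from two radial speeds by solving u_i . v = radial_i (Cramer's rule)."""
    a1, b1 = unit(target[0] - radars[0][0], target[1] - radars[0][1])
    a2, b2 = unit(target[0] - radars[1][0], target[1] - radars[1][1])
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-6:
        raise ValueError("lines of sight nearly parallel: velocity is not observable")
    vx = (radial[0] * b2 - radial[1] * b1) / det
    vy = (a1 * radial[1] - a2 * radial[0]) / det
    return vx, vy


# Usage: two rear corner radars 1.6 m apart (hypothetical), pedestrian 4 m behind the bumper.
radars = [(-0.8, 0.0), (0.8, 0.0)]
target = (0.0, 4.0)
true_v = (1.2, -0.3)  # m/s: mostly crossing, slightly approaching
measured = radial_speeds(target, radars, true_v)
print(measured)  # each radar alone sees only a partial, different radial component
print(full_velocity(target, radars, measured))  # recovers approximately (1.2, -0.3)
```

The wider the baseline between radars relative to target range, the better conditioned the solve; as the lines of sight approach parallel, the determinant shrinks and noise in the radial speeds is amplified.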

Our paper is organised as follows. We begin with a discussion of the camera system, in particular the processor inside the camera on which all computation is done; the right processor and choice of algorithms are critical to keeping system cost, power, and size low. Next, we present our camera smarts, namely the vision-based pedestrian and bicycle detector. This is followed by a discussion of the radar processing pipeline in the section “Radar Pipeline and Clustering”. Our fusion approach is outlined in the section “Simple Fusion Algorithm”, and the results showing improved performance are presented in the section “Experiments and Results”. The “Cooperative Radar” section covers our unique radar offerings, followed by conclusions and future improvements.

Camera Processor

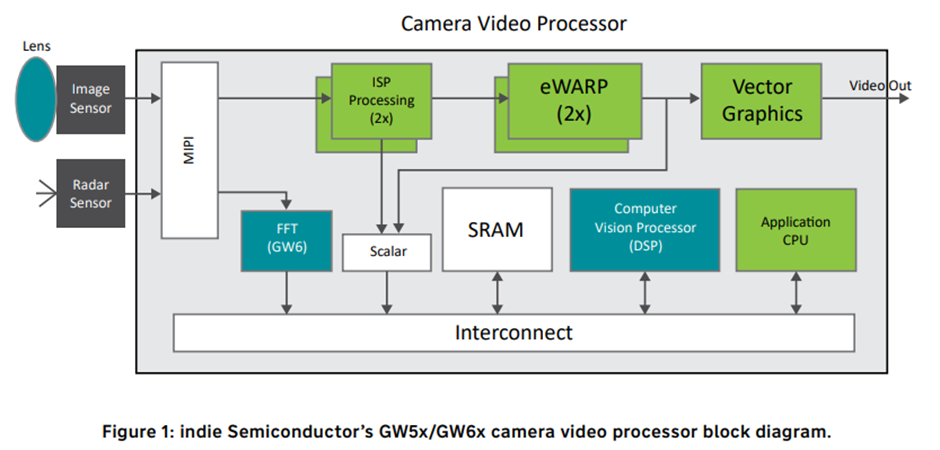

The standard backup camera consists of a lens, an image sensor, and some form of system-on-chip (SoC), which we call the camera processor (see Figure 1). The camera processor receives raw data from the image sensor and performs various functions to output a live video stream. indie Semiconductor offers several advanced camera video processors geared towards the automotive industry; for this paper, we worked with the GW5x and GW6x (currently in development) SoC product lines (see [3] for more information). These processors are highly efficient, low-power SoCs targeted at exterior automotive cameras. Figure 1 shows their key internal blocks.

The GW5x does not include the FFT block; all other blocks are common to both GW5x and GW6x, with variations in features and performance. Raw data from the image sensor enters through the MIPI interface and is processed by the image sensor processing (ISP) block (de-Bayer, noise reduction, HDR, auto-exposure, gamma, etc.) to create RGB data. The eWARP block is a custom warping engine that can apply complex geometric transformations to the video; any OSD/drawing is then applied through the vector graphics block, and lastly the video is output. Internal static random-access memory (SRAM), ranging from 3 to 6 MB, fulfils all system memory needs. At various points, frames can be grabbed, scaled (Scaler) and written to memory. The Computer Vision Processor is a highly parallelised Single Instruction/Multiple Data (SIMD) DSP block that performs all the computer vision processing, such as object detection. A main processor, the Application CPU, performs the control functions and can also run algorithms. A second MIPI interface accepts a raw radar signal, which is processed through a hardware FFT block (in GW6x); the resulting radar cube is then written to SRAM for further processing by the Computer Vision Processor. The GW6x family includes two independent copies of the ISP and eWARP blocks, allowing independent ISP tuning and geometric transforms for the viewing video stream and for the video used for computer vision processing.
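The FFT stage that turns a raw radar frame into a radar cube can be sketched as follows, assuming a conventional FMCW chirp-sequence radar (the waveform is not specified here). A range FFT runs along fast time (the samples within each chirp), then a Doppler FFT runs along slow time (the same range bin across chirps); a real cube adds a third axis for receive channels, omitted for brevity. A naive pure-Python DFT stands in for the GW6x hardware FFT block, and the synthetic single-target signal has no noise or windowing. All names are hypothetical.

```python
import cmath


def dft(x):
    """Naive O(N^2) discrete Fourier transform (a stand-in for a hardware FFT)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n)) for f in range(n)]


def range_doppler(frame):
    """frame[chirp][sample] (complex) -> magnitude map indexed [doppler_bin][range_bin]."""
    # 1) Range FFT along fast time: one transform per chirp.
    rng = [dft(chirp) for chirp in frame]
    n_chirps, n_bins = len(rng), len(rng[0])
    # 2) Doppler FFT along slow time: one transform per range bin, across all chirps.
    dop = [dft([rng[m][r] for m in range(n_chirps)]) for r in range(n_bins)]
    return [[abs(dop[r][d]) for r in range(n_bins)] for d in range(n_chirps)]


def synth_frame(n_chirps, n_samples, range_bin, doppler_bin):
    """Ideal beat signal of one point target: a tone in fast time plus a phase ramp in slow time."""
    return [
        [cmath.exp(2j * cmath.pi * (range_bin * n / n_samples + doppler_bin * m / n_chirps))
         for n in range(n_samples)]
        for m in range(n_chirps)
    ]


# Usage: 16 chirps x 32 samples, target placed in range bin 5 and Doppler bin 3.
rd = range_doppler(synth_frame(16, 32, range_bin=5, doppler_bin=3))
peak = max(((d, r) for d in range(16) for r in range(32)), key=lambda dr: rd[dr[0]][dr[1]])
print(peak)  # (3, 5): (doppler_bin, range_bin)
```

Offloading these transforms to fixed-function hardware leaves the SIMD Computer Vision Processor free for detection and clustering on the resulting cube.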

The indie processors have a small footprint in every respect, with a size of ~10 × 10 mm and power ranging from 0.5 to 1.0 W, depending on resolution and enabled features. This makes them ideal for camera solutions at the edge. With the ability to take in a second input, possibly of a different modality, they can also support specific fusion applications. All our software algorithms for this project were run either on the GW5x IC or on the bit-accurate simulator for the upcoming GW6x IC. The GW6x family also has a configuration with external DDR support; however, we chose not to enable it in order to keep the footprint minimal. Our development did not rely on any external memory.
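Staying within internal SRAM can be made concrete with a back-of-the-envelope budget. The resolutions, pixel formats and radar-cube dimensions below are hypothetical examples, not GW5x/GW6x specifications, and "MB" is read as 2^20 bytes. The arithmetic suggests why scaling frames before writing them to memory matters: one full-resolution RGB frame alone takes most of a 3 MB SRAM, whereas a downscaled luma frame for computer vision plus a modest radar cube use a small fraction of it.

```python
MIB = 1024 * 1024  # assumption: "MB" read as 2**20 bytes


def frame_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame."""
    return width * height * bytes_per_pixel


def radar_cube_bytes(samples, chirps, rx_channels, bytes_per_cell=4):
    """Size of a radar cube; 4 bytes per cell assumes complex int16 (I and Q)."""
    return samples * chirps * rx_channels * bytes_per_cell


def fits(buffers, sram_mib):
    """Return (total bytes, whether all buffers fit in an SRAM of the given size)."""
    total = sum(buffers.values())
    return total, total <= sram_mib * MIB


# Hypothetical buffers (not vendor figures).
full_rgb = frame_bytes(1280, 720, 3)   # full-resolution RGB888 frame
cv_luma = frame_bytes(640, 360, 1)     # 8-bit luma frame downscaled for CV
cube = radar_cube_bytes(256, 64, 4)    # 256 samples x 64 chirps x 4 RX channels

print(fits({"cv_luma": cv_luma, "cube": cube}, 3))                      # (492544, True)
print(fits({"full_rgb": full_rgb, "cv_luma": cv_luma, "cube": cube}, 3))  # (3257344, False)
print(fits({"full_rgb": full_rgb, "cv_luma": cv_luma, "cube": cube}, 6))  # (3257344, True)
```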
